This post is about the honor and experience of speaking at PASS Summit not once (2016) but twice (2017).

I recently received an email from PASS HQ that asked past speakers to share our success stories – to help others consider submitting for PASS Summit as a speaker.

This is an easy one for me – I loved speaking at PASS Summit.

Both times I learnt so many different things that helped me grow not only as a speaker but as a data platform technologist.

This is the first thing that I want to pass onto others who are considering submitting.

You will learn a lot:

You learn a lot when you prepare a presentation. You want to be ready for questions. When selecting a topic I want to know as much about it as I can so that I can answer the questions attendees might have. Not just the basic questions but the more advanced ones that will help them implement/change the setup of whatever technology I am talking about.

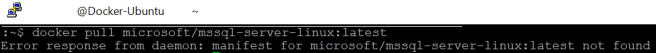

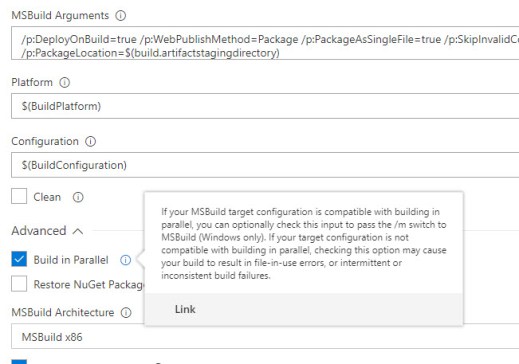

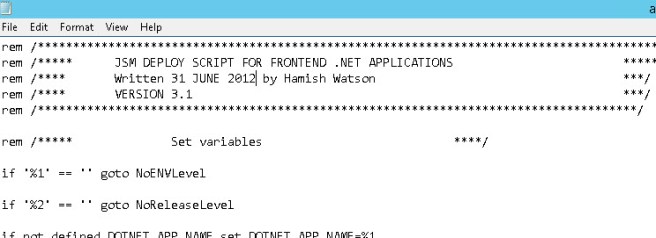

The flow-on effect of this was that one particular area I was talking on (SQL Server on Linux) helped the company I was working at, as it changed our direction and usage of the product. Now that is definitely a win | win situation!!

As a speaker I learnt a lot about speaking to crowds of people who are engaged and also want to learn from you. This helped me grow as a speaker as I spent more time on preparation – so that I could deliver the content really well at an event like PASS Summit.

Disclaimer: I personally think I have a way to go before I’m a really effective speaker – but I speak about Continuous Improvement with technology, so I’m happy to embrace this with my speaking craft.

Here is your chance to pay it forward:

For years I had been a consumer of content. Whenever I had an issue there were people who had written resolutions which helped me with just about every part of our technology stack. I had also attended free conferences like SQLSaturday and Code Camp and had learnt so much that helped me manage/deploy/tune SQL Server.

By standing up in front of people I was repaying all the kindness of those people who had given up their time to help me. My tag line has always been “I speak so that I can help at least one person in the crowd learn”.

The great thing is that after both my PASS Summit sessions people stayed behind to ask questions – which means that they were engaged and got something out of my session.

You are now part of a group of people who really care:

My first ever speaking engagement was with my good friend Martin Catherall. For years I had seen him speak, and he was good enough to put in a co-speaking session for us both at SQLSaturday Oregon in October 2015. It was brilliant as it allowed me to try my hand at speaking with my good mate next to me for support.

By being part of the speaker group I then met some of the most awesome people, who really care about the community.

Start small and achieve greatness:

So let’s say you want to start speaking and giving back to the community. A great way to start, and to practice for speaking at PASS Summit, is to support your local user group.

For a couple of reasons:

- It allows you to become an expert in your material and to grow in confidence as a speaker. Speaking to a room of 20 people I knew was a very rewarding experience and allowed me to get feedback on my material before going large.

- User groups need speakers. One of the hardest parts of running a user group is finding speakers, so I am always on the lookout for grass roots speakers and will support them by offering a slot at my SQL Server User Group.

So you know — win | win.

After speaking at a local user group, submit to your local SQLSaturday. I also run one, and for the past 3 years I have offered new speakers the chance to speak in front of a larger, more disparate crowd than their local user group.

So go ahead — think of a topic, write an abstract and submit!!

We need speakers like you in the community and PASS Summit needs more speakers to submit — so please take the plunge. If nothing else — you now have a subject that you can support your local user groups and community conferences with.

The ultimate outcome is that you get picked for PASS Summit and in a year or two write about your own experiences to help incubate another person to make a positive difference in our vibrant community.

Yip.

![tsql2sday150x150-1[1]](https://hybriddbablog.com/wp-content/uploads/2017/05/tsql2sday150x150-11.jpg?w=243&h=243)