The 2nd June 2017 will always be something of a special day for me.

It was the day I was awarded my Microsoft MVP for Data Platform.

Warning: this isn’t a technical blog post, nor is it a complete “how to get an MVP” guide.

For me, being awarded the Microsoft MVP was immensely humbling – mainly because it involved people nominating me and, of course, a review of the contributions I had made in the community. The first bit was the big one for me – people nominated me because they thought I was worthy of an MVP award.

That blew me away.

And was daunting.

Daunting – because for the past 4 years I’ve been involved in the community, running conferences, running a User Group and speaking about things that had baffled me until I worked them out – and I thought that others might have struggled too…

…and might want to hear my battle/war stories.

I never once did any of my community things for recognition. I did them because for years I had been selfish – I had consumed many blog posts/forums/articles that got me out of sticky situations.

So now was my time to give back. In 2014 I made a conscious decision to start presenting and really try and help/inspire at least one person every time I talked.

My tagline was “make stuff go” as that is what all of this is about – making stuff go in the best possible, most efficient manner so all of our work/life balance is “sweet as”.

And then I had my first MVP nomination last year.

Nomination:

I will always be grateful for the people who nominated me – a keyphrase of MVPs is “NDA” and for the purposes of this post I am being NDA about the people who nominated me.

You know who you are, I know who you are, heck — Microsoft know who you are!!

And I personally want to thank you all for making me speechless when I got the notification – speechless that you believed in what I was trying to do.

Here’s the thing – for my first nomination I didn’t fill in the ‘paperwork’ for 2 months as I didn’t feel worthy – especially as there was a person who I knew had been nominated and I felt that in the finite world of MVP awards they deserved it way more than I did.

I did eventually fill it out after someone told me that, whilst that was honorable, it was slightly dumb (their honest words, not mine). I actually submitted that first one after my Ignite presentation: once people had come up to ask questions and talk, I felt I finally had something to share/give to the global community.

And also that date is/will be forever etched in my memory.

Finding a niche

In a community of brilliant people who can tune SQL Server, are masters of AGs and even know more than me about tempDB tuning – what did I have to offer that I knew could make a massive step change in our industry?

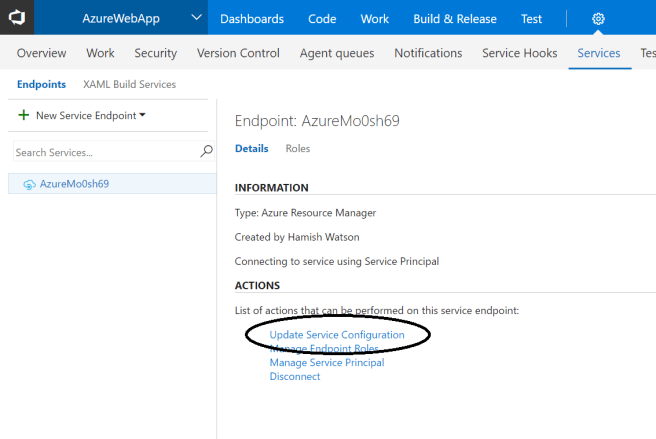

Well, I had for years been working with application developers at Jade Software making stuff go. It involved things like Continuous Integration and Continuous Delivery – things associated with DevOps.

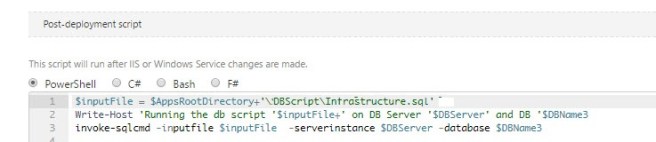

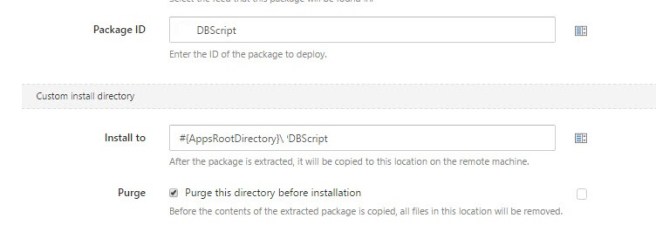

So I decided: why not talk about and share database deploys that could have some DevOps brilliance applied to them? For years now application devs have benefited from DevOps – whereas “databases are too hard/important” was a common phrase I heard.

I had someone once tell me “Being nominated for MVP is the easy part, so someone took 2 minutes out of their day to think about you. Well done. The hard part is proving you’re worthy of becoming an MVP”. They were right.

MVP is about Community:

I consider myself lucky and spoilt because I got to hang out with some awesome MVPs in my neck of the woods.

I’ll take one step back and mention someone who wasn’t an MVP at the time (but now is) and that’s Steve Knutson.

I’ve known Steve for 23.5 years. We met at university and over that time he’s been a mate. He helped me out when I was getting my MCSE in 1999 (he sat/passed SQL Server 6.5 exams at the time!!). We lost contact for about 7 years — but caught up again and Steve really is that nice guy you read about. He’s an extremely focused guy and willing to help others gain knowledge.

Martin Catherall (t | b) and Warwick Rudd (t | b | w) were two blokes who I made friends with (when I got up the courage to talk to a Microsoft Certified Master….) and who helped me so much over the past few years – not just with MVP related things but heaps of other things.

And that to me is what an MVP is about – someone who cares a heap about sharing their knowledge and mentoring those who don’t know things.

Combined with Rob Farley (t | b | w), one of the most insanely brilliant, intelligent, quick-witted people I’ve ever met, I had a triumvirate of MVP mentorship.

So I had three “local” MVPs, but in fact I have 2 other people who enriched my life at both a personal level and a mentor level.

Nagaraj (Raj) Venkatesan (t | b) and Melody Zacharias (t | b | w) – both of whom are like family to me. Their positiveness and support when I needed advice or just a “hey bro, how’s things” was awesome and I hope to be the same kind of mentor within the remote (global) community that you both were for me.

Now – here’s the thing – I actually had to prove that I was worth something – and that reads way worse than it sounded in my head when I wrote this……………..

Yes MVPs will help you out – but you also have to help yourself out. Life is not about spoon feeding. The guys were awesome to bounce ideas off for things I wanted to do in the community and I really would not be here without them.

Now of course there are others (a special mention to Reza Rad, whose work in the global community I would love to emulate) – but I’ve chosen Martin, Warwick and Rob because they were the guys I Skyped, messaged and talked to the most and lads – I am so appreciative that you put up with me, that you consoled me and counselled me through the years.

The one thing I learned becoming an MVP:

Stay true to why you started doing all this.

If you’re just speaking/writing to try and rack up points or whatever to become an MVP – I’m sorry but (in my opinion) that is not a good reason to become an MVP.

While I was going through the process I stopped thinking about MVP (where I could…) and stuck to what I’d been doing the past 3 years. I continued to hone my craft, to extend my reach in the GLOBAL community and most of all to find new ways to help people “make stuff go”.

I have seen people who want to become an MVP go slightly insane about it or even go about things the wrong way. Like really wrong…

For me, over the past months I decided to forget about the MVP and stick to first principles – sharing knowledge is why I got into this game. Being able to see after 60 minutes that I’ve made someone have a eureka moment or epiphany – that is the goal.

I would spend lunch hours talking to anyone who needed help with DevOps, databases or Azure, because I finally found I had knowledge and could help/mentor others, which was such a great feeling of accomplishment.

Blogging for me was a new thing – again I had been selfish over the years reading others’ work. Blogging allowed me to try stuff out, then write about what I’d done. Some of it was very simple stuff – but you know what – there is a place for simple technical blogs. I know because over the years I had done many searches for just simple things. So I am finding a balance – treating my technical blogging like a journey.

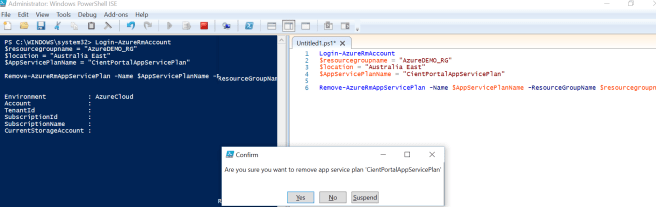

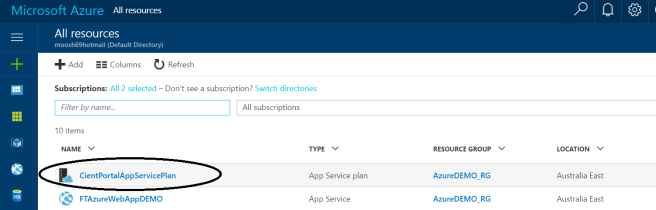

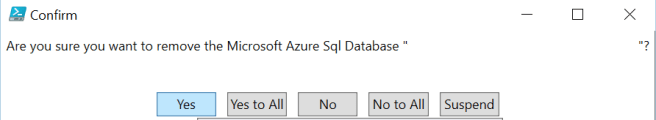

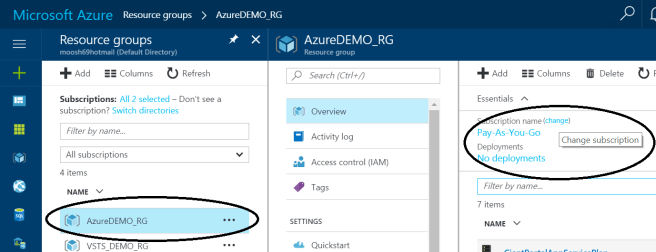

Creating one database in Azure using one line of PowerShell – done

Creating many databases in Azure using one line of PowerShell – done

Creating a Continuous Delivery Pipeline in 59 minutes using PowerShell – coming soon

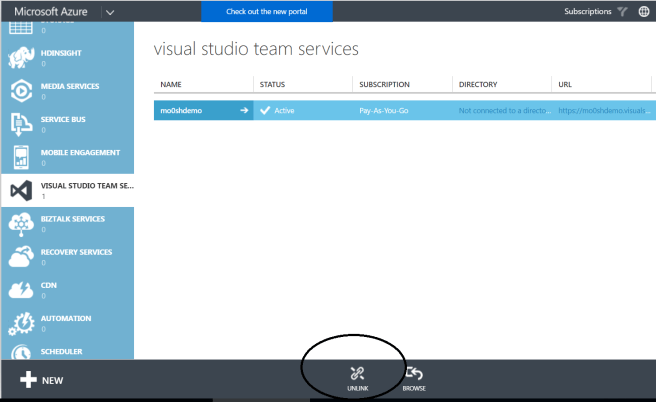

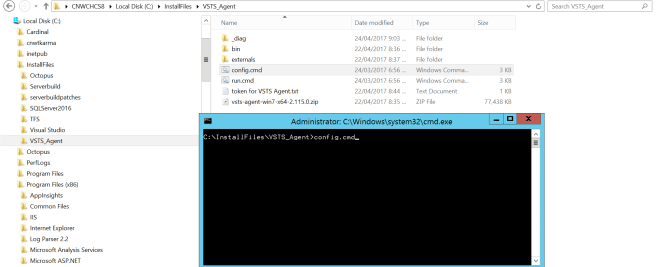

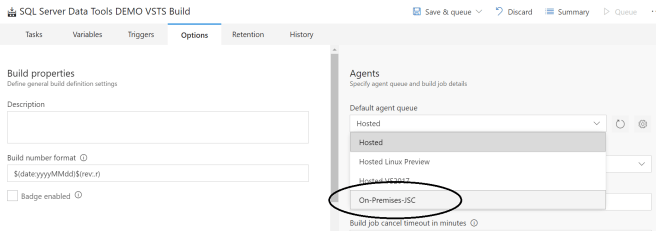

My blog post on VSTS hosted agents has got some good hits of late. That was a blog post that came out of my scrooge-like tendency to not spend money on build minutes!!

All of these things — speaking/mentoring others/writing — were my new first principles and I stuck to them. I did think about the MVP thing — I’m human, but I didn’t let it consume me.

Whenever consuming thoughts crept in, I would purposely put my energy into writing/doing more content – to find better ways of delivering knowledge back to the community.

May 2017 was a massive month for me – I ran a conference, spoke 7 times and traveled to SQL Saturday Brisbane.

I was exhausted at the end of it, but so rewarded as I helped 2nd year ICT students, a Virtual Chapter, a User Group in Canada, a User Group in Christchurch, the attendees of Code Camp Christchurch (which I co-ran and where I spoke twice – twice!!) and of course the Brisbanites.

I was going to spend last weekend writing up some more content and planning for the upcoming DevOps Boot Camp which I’m running.

But on Friday morning at 6:20am I checked my emails – which I do every morning – and there it was. I had been awarded the Microsoft MVP Award. For the first time in my life I was speechless. I’m man enough to admit I cried. Mostly because I didn’t think I was worthy enough to be an MVP, and yet other people had thought I was.

My only regret is that I can’t tell my mum – a lady who took in the strays and unfortunates of society and endeavored to help them. She instilled in me from a young age that we were so lucky with what we had and that we have to help those who aren’t so lucky.

It stuck with me over the years and is my first principle — to help people grow.

That is why, 4 days in, I have come to the place where I know I am worthy of this MVP award.

I am also excited because I am now moving from the phase of achieving an MVP award to delivering like an MVP would.

Yip.

![tsql2sday150x150-1[1]](https://hybriddbablog.com/wp-content/uploads/2017/05/tsql2sday150x150-11.jpg?w=94&h=94)