I can’t really write about provisioning anything in Azure without mentioning the Azure CLI.

My last two posts were about using

Here we will provision Azure Data Explorer (ADX) using the Azure CLI.

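Step 1: Install and configure the Azure CLI

To use the Azure CLI, you first need to install it on your local machine. The Azure CLI can be installed on Windows, macOS, or Linux, and detailed instructions can be found in the Azure documentation. In current versions of the CLI, the `az kusto` commands used below ship as the `kusto` extension, which you can add with `az extension add --name kusto`.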

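Once the Azure CLI is installed, sign in with the `az login` command. It opens a browser window where you authenticate with your Azure account. If your account has access to more than one subscription, select the one to deploy into with `az account set --subscription <subscription-id>`.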
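If you prefer to script the whole walkthrough, the session setup can be sketched as below. This is a minimal sketch under assumptions: the subscription ID, the resource group name (myresourcegroup) and the region are hypothetical placeholders, and creating a resource group is an added step, since an ADX cluster has to live in one. Because ADX clusters are billable, the sketch defaults to a dry run that only prints each command.

```bash
#!/usr/bin/env bash
# Minimal session-setup sketch. DRY_RUN=1 (default) only prints commands;
# set DRY_RUN=0 to execute them against your subscription.
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"
# Hypothetical placeholder values: replace with your own.
SUBSCRIPTION_ID="${SUBSCRIPTION_ID:-<your-subscription-id>}"
RESOURCE_GROUP="${RESOURCE_GROUP:-myresourcegroup}"
LOCATION="${LOCATION:-eastus}"

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

run az login                                          # browser-based sign-in
run az account set --subscription "$SUBSCRIPTION_ID"  # pick the target subscription
run az extension add --name kusto --upgrade           # install or update the kusto extension
run az group create --name "$RESOURCE_GROUP" --location "$LOCATION"
```

Saved as, say, setup.sh, running `DRY_RUN=0 SUBSCRIPTION_ID=<id> ./setup.sh` would execute the commands instead of printing them.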

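Step 2: Create an Azure Data Explorer cluster

To create an ADX cluster, you can use the `az kusto cluster create` command. Here’s an example command that creates an ADX cluster named “myadxcluster” in the “East US” region with the “Standard_D13_v2” SKU and two nodes. A cluster must be created in a resource group; “myresourcegroup” is a placeholder for one of your own:

```bash
az kusto cluster create \
  --cluster-name myadxcluster \
  --resource-group myresourcegroup \
  --location eastus \
  --sku name="Standard_D13_v2" tier="Standard" capacity=2
```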

This command will create an ADX cluster with the specified name, location, SKU, and node capacity. You can customize these settings to fit your needs.
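Cluster creation is a long-running operation, so it is worth confirming the result before moving on. A minimal sketch, assuming the cluster's `provisioningState` field (which Microsoft's quickstart checks for the value `Succeeded`) and a hypothetical resource group named myresourcegroup. By default it only prints the command; set DRY_RUN=0 to run it.

```bash
#!/usr/bin/env bash
# Sketch: confirm the cluster finished provisioning.
# DRY_RUN=1 (default) prints the command; DRY_RUN=0 runs it.
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"
RESOURCE_GROUP="${RESOURCE_GROUP:-myresourcegroup}"  # hypothetical placeholder
CLUSTER="${CLUSTER:-myadxcluster}"

run() {
  if [ "$DRY_RUN" = "1" ]; then echo "+ $*"; else "$@"; fi
}

# Prints "Succeeded" once the cluster has been provisioned.
run az kusto cluster show \
  --cluster-name "$CLUSTER" \
  --resource-group "$RESOURCE_GROUP" \
  --query provisioningState \
  --output tsv
```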

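Step 3: Create an Azure Data Explorer database

After creating an ADX cluster, you can create a database within the cluster using the `az kusto database create` command.

Here’s an example command that creates a read-write database named “myadxdatabase” within the “myadxcluster” cluster (“myresourcegroup” is a placeholder for your resource group). The two periods are ISO 8601 durations: data is retained for 365 days (soft-delete period), and the most recent 31 days are kept in the hot cache for fast queries:

```bash
az kusto database create \
  --cluster-name myadxcluster \
  --database-name myadxdatabase \
  --resource-group myresourcegroup \
  --read-write-database location=eastus soft-delete-period=P365D hot-cache-period=P31D
```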

This command will create a new database with the specified name within the ADX cluster.
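To confirm the database was created, you can check its `provisioningState`, which Microsoft's quickstart expects to read `Succeeded`. A minimal sketch with placeholder names (myresourcegroup is hypothetical); it only prints the command unless DRY_RUN=0 is set.

```bash
#!/usr/bin/env bash
# Sketch: confirm the database finished provisioning.
# DRY_RUN=1 (default) prints the command; DRY_RUN=0 runs it.
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"
RESOURCE_GROUP="${RESOURCE_GROUP:-myresourcegroup}"  # hypothetical placeholder
CLUSTER="${CLUSTER:-myadxcluster}"
DATABASE="${DATABASE:-myadxdatabase}"

run() {
  if [ "$DRY_RUN" = "1" ]; then echo "+ $*"; else "$@"; fi
}

# Prints "Succeeded" once the database has been provisioned.
run az kusto database show \
  --cluster-name "$CLUSTER" \
  --database-name "$DATABASE" \
  --resource-group "$RESOURCE_GROUP" \
  --query provisioningState \
  --output tsv
```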

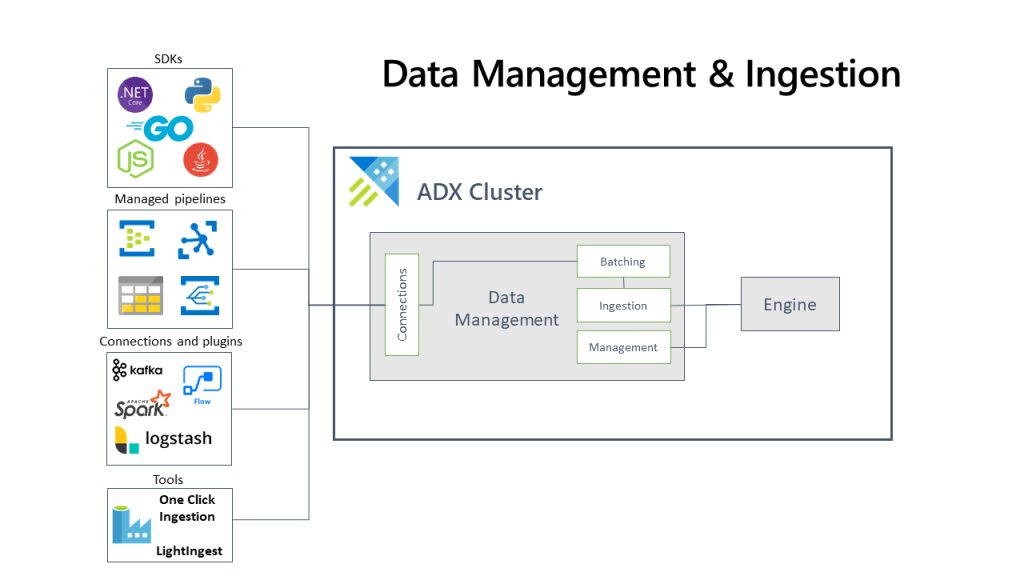

Step 4: Configure data ingestion

Once you have created a database, you can configure data ingestion from the Azure Data Explorer web UI or from the Azure CLI. Be aware that the Azure CLI has no `data-ingestion-rule` command. What it does offer is `az kusto data-connection`, with `event-hub`, `event-grid` and `iot-hub` subcommands that connect a table in your database to Event Hubs, IoT Hub, or blob storage notifications (via Event Grid) for continuous ingestion.

For a one-off load of a CSV file such as “mydata.csv”, you use Kusto management commands instead, run from the ADX web UI or another query tool. First create a table whose schema matches the file (for example `col1:string, col2:int, col3:datetime`) with `.create table`, then run `.ingest into table` against the file’s blob storage URL with `format='csv'` and `ignoreFirstRecord=true` so the header row is skipped. For a file on your local machine, the web UI’s ingestion wizard can upload it for you.
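For a one-off CSV load, a documented route is to create a table and then run `.ingest into table` against the file in blob storage. The helper below is a minimal sketch that turns the column list `col1:string,col2:int,col3:datetime` into those two Kusto management commands, ready to paste into a query editor. The table name MyTable and the storage URL with its SAS token are hypothetical placeholders.

```bash
#!/usr/bin/env bash
# Sketch: build Kusto management commands for a one-off CSV load.
# MyTable and the blob URL below are hypothetical placeholders.
set -euo pipefail

# "col1:string,col2:int" -> ".create table MyTable (col1:string, col2:int)"
create_table_cmd() {
  local table="$1" schema="$2"
  echo ".create table ${table} (${schema//,/, })"
}

# Ingest a CSV blob, skipping its header row.
ingest_csv_cmd() {
  local table="$1" blob_url="$2"
  echo ".ingest into table ${table} ('${blob_url}') with (format='csv', ignoreFirstRecord=true)"
}

create_table_cmd MyTable 'col1:string,col2:int,col3:datetime'
ingest_csv_cmd MyTable 'https://<account>.blob.core.windows.net/<container>/mydata.csv?<sas-token>'
```

Microsoft’s documentation positions `.ingest into` (direct ingestion) mainly for exploration and prototyping; for ongoing loads, one of the data connections above is the better fit.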

Step 5: Verify your deployment

Once you have completed the above steps, you can verify your Azure Data Explorer deployment by running queries against the database in your ADX cluster. You can use the Azure Data Explorer web UI, or a desktop tool such as Azure Data Studio with its Kusto (KQL) extension; both provide a graphical interface for querying and analyzing data in ADX.
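Whichever tool you pick, it needs the cluster’s query endpoint. A minimal sketch, assuming the kusto extension returns that endpoint as a top-level `uri` field; the resource group and the table name MyTable are hypothetical placeholders. It prints the lookup command unless DRY_RUN=0 is set, followed by a few starter queries.

```bash
#!/usr/bin/env bash
# Sketch: look up the cluster's query URI and print starter KQL checks.
# DRY_RUN=1 (default) prints the az command; DRY_RUN=0 runs it.
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"
RESOURCE_GROUP="${RESOURCE_GROUP:-myresourcegroup}"  # hypothetical placeholder
CLUSTER="${CLUSTER:-myadxcluster}"

run() {
  if [ "$DRY_RUN" = "1" ]; then echo "+ $*"; else "$@"; fi
}

# Query endpoint to paste into the web UI or another query tool.
run az kusto cluster show \
  --cluster-name "$CLUSTER" \
  --resource-group "$RESOURCE_GROUP" \
  --query uri \
  --output tsv

# Starter queries to run in the database (MyTable is hypothetical).
cat <<'KQL'
.show tables
MyTable | count
MyTable | take 10
KQL
```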

Provisioning Azure Data Explorer using the Azure CLI is a pretty simple and straightforward process.

Yip.